Richard Solomon

One of the most powerful PCI Express features in today's data centers is I/O virtualization, which lets virtual machines access I/O hardware directly and so improves the performance of enterprise-class servers. The Single Root I/O Virtualization (SR-IOV) specification has driven market adoption of this capability. SR-IOV allows a single PCI Express device to appear to the host as multiple separate "virtual" devices. It does this by adding a new type of PCI Express function, the Virtual Function (VF), alongside the traditional PCI Express function, now called the Physical Function (PF). Physical Functions control the creation and allocation of the new Virtual Functions.

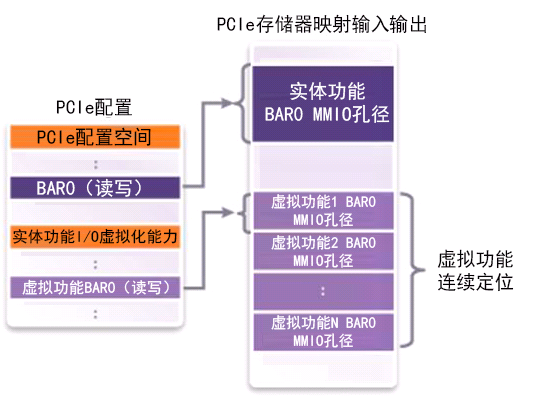

Virtual Functions share the device's underlying hardware and its PCI Express link (see Figure 1). A key feature of the SR-IOV specification is that Virtual Functions are very lightweight, so many of them can be implemented in a single device.

Figure 1: Virtual Functions present separate views and configurations of the Physical Function's underlying hardware, while data still moves over a single PCIe interface.

Virtualization requirements

System-on-chip (SoC) architects often spend considerable effort determining how many Virtual Functions a device needs to provide. A single minimal Virtual Function, meeting the definition of "lightweight", requires about 1,000 bytes of storage. If each Virtual Function must also support data-center-oriented features such as Advanced Error Reporting (AER) and Message Signaled Interrupts (MSI/MSI-X), the storage required can grow three- to four-fold. The SoC application logic must also be extended to virtualize the SoC's mission-critical functions, but that is beyond the scope of this article. At a minimum, SoC architects should provide one Virtual Function for each virtual machine the device is expected to support.
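As a rough sizing aid, the figures above can be turned into a quick estimate. This is a minimal sketch that assumes the ~1,000-byte minimal Virtual Function and a 4x multiplier for AER and MSI/MSI-X (the top of the three- to four-fold range); `vf_storage_bytes` is a hypothetical helper, not part of any tool.

```python
# Rough estimate of Virtual Function (VF) configuration storage.
# Assumptions taken from the text: a minimal VF needs about 1,000 bytes,
# and AER plus MSI/MSI-X multiply that by roughly 3 to 4 (4 by default here).

MIN_VF_BYTES = 1_000


def vf_storage_bytes(num_vfs: int, full_featured: bool, feature_factor: float = 4.0) -> int:
    """Approximate total VF configuration storage in bytes (hypothetical helper)."""
    if num_vfs < 0:
        raise ValueError("num_vfs must be non-negative")
    per_vf = MIN_VF_BYTES * (feature_factor if full_featured else 1.0)
    return int(num_vfs * per_vf)


for vfs in (32, 64, 256):
    minimal = vf_storage_bytes(vfs, full_featured=False)
    rich = vf_storage_bytes(vfs, full_featured=True)
    print(f"{vfs:4d} VFs: {minimal:>9,d} bytes minimal, {rich:>9,d} bytes with AER + MSI/MSI-X")
```

Under these assumptions, a few hundred full-featured Virtual Functions already need on the order of a megabyte of configuration storage, which is why the choice of storage technology matters.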

Consider a four-socket server with eight cores per socket: it can run 32 virtual machines (one per core), and with Hyper-Threading, 64 or more virtual machines are entirely feasible. Some types of applications, such as high-end networking or storage processors, subdivide SoC resources even further, so it can make sense for each small slice of resources to be controlled by its own Virtual Function. The virtual machine manager then gives each virtual machine a differently sized share of the SoC by assigning it an unequal number of Virtual Functions. As a result, these device architectures can easily call for hundreds of Virtual Functions!
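The arithmetic in the server example, and the idea of handing out unequal numbers of Virtual Functions, can be sketched as follows. The per-VM weights and the largest-remainder rounding policy are assumptions for illustration; a real virtual machine manager applies its own allocation policy.

```python
from __future__ import annotations


def vm_count(sockets: int, cores_per_socket: int, threads_per_core: int = 1) -> int:
    """One virtual machine per hardware thread (per core when threads_per_core == 1)."""
    return sockets * cores_per_socket * threads_per_core


def allocate_vfs(total_vfs: int, weights: dict[str, int]) -> dict[str, int]:
    """Split total_vfs across VMs in proportion to weights (largest-remainder rounding)."""
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    exact = {vm: total_vfs * w / total_weight for vm, w in weights.items()}
    alloc = {vm: int(x) for vm, x in exact.items()}
    leftover = total_vfs - sum(alloc.values())
    # Give the VFs lost to truncation to the VMs with the largest fractional shares.
    for vm in sorted(exact, key=lambda v: exact[v] - alloc[v], reverse=True)[:leftover]:
        alloc[vm] += 1
    return alloc


print(vm_count(4, 8))     # 32: four sockets x eight cores, one VM per core
print(vm_count(4, 8, 2))  # 64: the same server with two Hyper-Threads per core
print(allocate_vfs(256, {"database": 4, "web": 2, "batch": 1, "test": 1}))
# {'database': 128, 'web': 64, 'batch': 32, 'test': 32}
```

Weighting lets a larger VM receive more Virtual Functions, and therefore more of the SoC's resources, without any change to the device itself.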

Determining the Right Storage Solution for Virtualization

PCIe configuration space is generally implemented as flip-flop-based registers. The reason is that a PCIe device may have six or so address decoders, various control fields, and a large number of error and other status bits, all of which operate independently of one another.

Flip-flop-based address decoders minimize latency, and flip-flop-based control and status registers can be wired directly to the associated logic, which simplifies the designer's job and makes the design straightforward to synthesize. Unfortunately, as the number of Virtual Functions grows and the number of PCIe features per Virtual Function rises (especially register-heavy features such as AER and MSI-X), the gate count of these registers can become a significant burden. Implementing hundreds of full-featured Virtual Functions this way can add as many as two or three million gates to a product.

Because the SR-IOV specification allows a single device to support more than 64,000 Virtual Functions, the PCI-SIG put considerable effort into enabling implementations that do not map every register directly to flip-flops. Wherever possible, the control and status functions of all Virtual Functions are unified with those of the associated Physical Function.

All PCI Express link-level controls fall into this category, because no single Virtual Function should be able to take down a link it shares with other Virtual Functions. Only the controls that must be exercised individually for each Virtual Function (such as Bus Master Enable) are replicated. Address decoding is greatly simplified because each Virtual Function's copy of a memory region is placed contiguously within a region defined by the Physical Function, so no matter how many Virtual Functions are enabled, only one set of decoders per region needs to be added, rather than an additional decoder for every Virtual Function (see Figure 2). As a result, most of a Virtual Function's storage can be slow, long-latency memory, rather than flip-flops that are mapped directly and accessible in the same clock cycle.

Figure 2: Because the Virtual Functions' memory regions are placed contiguously, memory address decoding for Virtual Functions is greatly simplified.

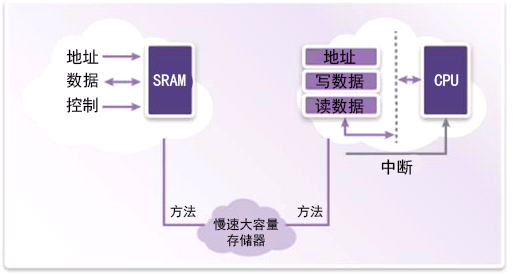
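The decoding benefit can be sketched in a few lines. This assumes the SR-IOV layout in which every Virtual Function's copy of a given BAR sits back to back, so the copy for zero-based VF index i starts at base + i × size; it also assumes a power-of-two aperture size. `VfBarRegion` and its fields are hypothetical names. One range check and one shift identify the target Virtual Function, however many are enabled.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VfBarRegion:
    """One BAR shared by all VFs; the per-VF copies are laid out contiguously."""
    base: int     # address of VF 0's copy of this BAR
    size: int     # per-VF aperture size, assumed to be a power of two
    num_vfs: int  # number of VFs currently enabled

    def __post_init__(self) -> None:
        if self.size <= 0 or self.size & (self.size - 1):
            raise ValueError("size must be a power of two")

    def decode(self, addr: int) -> Optional[tuple[int, int]]:
        """Return (vf_index, offset) for addr, or None if addr misses the region.

        A single range check plus a shift replaces one comparator per VF.
        """
        if not self.base <= addr < self.base + self.size * self.num_vfs:
            return None
        delta = addr - self.base
        shift = self.size.bit_length() - 1  # log2(size)
        return delta >> shift, delta & (self.size - 1)


bar0 = VfBarRegion(base=0x8000_0000, size=0x4000, num_vfs=256)
print(bar0.decode(0x8000_0010))                      # (0, 16)
print(bar0.decode(0x8000_0000 + 5 * 0x4000 + 0x20))  # (5, 32)
print(bar0.decode(0x8000_0000 + 256 * 0x4000))       # None: just past the last VF
```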

PCI Express controller designers carefully isolate the small amount of storage (implemented in flip-flops) that each Virtual Function needs for fast, low-latency access, and add logic to merge it with data from the slower bulk storage and, in some cases, with data from the Physical Function (see Figure 3). This naturally increases the latency of system reads and writes to the device's configuration space. However, those registers are accessed mainly during initialization, so the extra latency does not affect the device's I/O performance.

Figure 3: Data from slower bulk storage is merged with underlying Physical Function information, allowing Virtual Function configuration space to be stored outside the PCI Express controller.
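The merge step can be illustrated with bit masks. In this hypothetical sketch, each bit of a 32-bit configuration register comes from exactly one source: per-Virtual-Function flip-flops, slower bulk RAM, or the Physical Function. The masks are illustrative assumptions, not a real register map; bit 2 was chosen only because it is the Bus Master Enable position in the PCI Command register.

```python
# Hypothetical merge of three sources into one 32-bit VF configuration read.
# Each mask selects which bits a source owns; together they cover all 32 bits
# with no overlap. The mask values are illustrative, not a real register map.

FLOP_MASK = 0x0000_0004  # fast per-VF bits kept in flip-flops (bit 2, e.g. a bus-master enable)
PF_MASK = 0xFFFF_0000    # fields shared with, and supplied by, the Physical Function
RAM_MASK = 0xFFFF_FFFF & ~(FLOP_MASK | PF_MASK)  # everything else lives in slow bulk RAM


def merge_config_read(ram_word: int, flop_bits: int, pf_word: int) -> int:
    """Combine the three sources into the value returned to the host."""
    return (ram_word & RAM_MASK) | (flop_bits & FLOP_MASK) | (pf_word & PF_MASK)


# Bit 2 always follows the flip-flop, whatever the RAM image holds.
print(hex(merge_config_read(ram_word=0x1234_5678, flop_bits=0x4, pf_word=0xABCD_0000)))  # 0xabcd567c
print(hex(merge_config_read(ram_word=0x1234_567C, flop_bits=0x0, pf_word=0xABCD_0000)))  # 0xabcd5678
```

Keeping the masks disjoint means the fast path (the flip-flops) can be updated every cycle while the RAM image is refreshed lazily, without the host ever seeing a conflicting value.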

Whether configuration space is implemented in flip-flops or in slow memory, the SR-IOV device's main memory address space is accessed at the same speed. Configuration space is accessed only when the host first initializes the device and when it handles link-level errors, times at which virtual machine operation is suspended anyway. Also note that the SR-IOV specification assumes the virtual machine manager will intercept and mediate every virtual machine access to configuration space, so even if the hardware adds dozens of clock cycles, that is nominal compared with the total system-level configuration access time.

On-chip memory versus CPU-based memory

Because configuration access speed is not critical to device performance, PCI Express controller designers may place bulk storage in on-chip static random access memory (SRAM), or even off chip in dedicated memory such as DDR. Given the density of today's silicon processes, a relatively large SRAM fits in a very small silicon area, certainly small compared with the millions of gates needed to implement flip-flop-based registers for thousands of virtual devices. SoC designers facing large numbers of Virtual Functions should welcome this. Even so, a considerable amount of physical memory remains dedicated to SR-IOV, and that memory is wasted when the system does not use all of the Virtual Functions the device provides, or when one silicon die is used across several different products.

Some SoC architectures may use dedicated memory that can easily be repartitioned, so that memory SR-IOV does not need can be used by the underlying application logic. In other architectures, the SoC architect may want to consider offloading bulk storage to a local CPU through an interrupt mechanism (see Figure 4). In this case, the PCI Express controller presents read and write requests for bulk storage, which are actually serviced by the local CPU, with data or acknowledgments returned to the controller hardware. Because the SoC firmware can choose how much CPU memory to allocate without coordinating memory among other hardware blocks or multiple ports, waste is avoided and RAM partitioning is very straightforward. Since much of the Virtual Function behavior can be implemented in firmware, devices using this approach also gain flexibility in the PCIe functionality they support: the same hardware can serve different SKUs through different firmware builds, or simply through different configurations, with different numbers of Virtual Functions and with or without AER and MSI-X.

Figure 4: Bulk storage can be implemented directly in on-chip memory, or indirectly by having the local CPU service read and write requests.
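The firmware-serviced approach can be sketched as a handler that the local CPU runs when the controller forwards a configuration request (for example, after raising an interrupt). `FirmwareVfConfigStore` and its methods are hypothetical; the point is that the number of Virtual Functions and the optional features become construction-time parameters, and storage is ordinary CPU memory allocated only for registers that are actually written.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class FirmwareVfConfigStore:
    """Hypothetical firmware-side backing store for VF configuration space."""
    num_vfs: int
    support_aer: bool = False
    support_msix: bool = False
    # Sparse store keyed by (vf_index, dword-aligned offset); unwritten registers read as 0.
    _regs: dict[tuple[int, int], int] = field(default_factory=dict, init=False, repr=False)

    def enabled_features(self) -> list[str]:
        """Features this build or configuration exposes (the SKU choice)."""
        features = ["SR-IOV"]
        if self.support_aer:
            features.append("AER")
        if self.support_msix:
            features.append("MSI-X")
        return features

    def _check(self, vf: int, offset: int) -> None:
        if not 0 <= vf < self.num_vfs:
            raise ValueError(f"VF {vf} is not enabled in this configuration")
        if offset % 4:
            raise ValueError("offset must be dword-aligned")

    def handle_read(self, vf: int, offset: int) -> int:
        """Return the data the controller sends back for a forwarded read."""
        self._check(vf, offset)
        return self._regs.get((vf, offset), 0)

    def handle_write(self, vf: int, offset: int, value: int) -> None:
        """Store a forwarded write; returning normally stands in for the acknowledgment."""
        self._check(vf, offset)
        self._regs[(vf, offset)] = value & 0xFFFF_FFFF


# Two hypothetical SKUs built from the same hardware, differing only in configuration.
entry = FirmwareVfConfigStore(num_vfs=16)
high_end = FirmwareVfConfigStore(num_vfs=256, support_aer=True, support_msix=True)
high_end.handle_write(vf=7, offset=0x04, value=0x0000_0006)
print(entry.enabled_features(), high_end.enabled_features())
print(hex(high_end.handle_read(vf=7, offset=0x04)))  # 0x6
```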

Summary

SoC architects and PCI Express controller designers should evaluate the total number of Virtual Functions they need and then, based on that total and on silicon area constraints, decide whether flip-flops, SRAM, or CPU-based memory is appropriate. Synopsys offers silicon-proven DesignWare IP for PCI Express, compliant with the latest PCI-SIG and SR-IOV specifications, which uses a combination of flip-flops, RAM, and a local CPU to flexibly implement thousands of Virtual Functions. The SR-IOV implementation in DesignWare PCI Express IP is configurable and scalable, helping designers accelerate time to market.