Nginx is a lightweight web server, reverse proxy server, and email (IMAP/POP3) proxy server released under a BSD-like license. It is known for its small memory footprint and strong concurrency; in fact, its concurrency performance is among the best of web servers of its kind. Nginx users in mainland China include Baidu, Jingdong, Sina, NetEase, Tencent, and Taobao.

Why use Nginx?

At present, Nginx's main competitor is Apache. Here, the editor makes a simple comparison between the two to help you better understand Nginx's advantages.

1. As a web server:

Compared with Apache, Nginx uses fewer resources, supports more concurrent connections, and is more efficient, which makes it especially popular with web hosting providers. Under high connection concurrency, Nginx is a good alternative to Apache: it is one of the platforms frequently chosen by web hosting businesses in the United States and can support up to 50,000 concurrent connections, thanks to its use of epoll and kqueue as its event model.

Nginx as a load balancing server: Nginx can serve Rails and PHP applications directly, or act as an HTTP proxy server in front of them. Nginx is written in C and is much better than Perlbal in terms of system resource overhead and CPU efficiency.

2. Nginx configuration is simple, while Apache's is complex:

Nginx is especially easy to start and can run almost 24/7 without stopping; even after several months of running, it does not need a restart. The software version can also be upgraded without interrupting service.

Nginx's static file performance is more than three times that of Apache. Apache's PHP support is comparatively simple, whereas Nginx needs to be paired with another backend; Apache also has more components than Nginx.

3. The core difference is:

Apache uses a synchronous multi-process model in which each connection corresponds to one process; Nginx is asynchronous, so many connections (on the order of 10,000) can be handled by a single process.

4. Where each one excels:

Nginx's strength is handling static requests with low CPU and memory usage, while Apache is better suited to dynamic requests. A common setup today therefore uses Nginx at the front end as a reverse proxy to absorb the load, with Apache as the backend handling dynamic requests.

Basic use of Nginx

System platform: CentOS release 6.6 (Final) 64-bit.

First, install the compiler tools and library files

Second, install PCRE (it must be installed before Nginx)

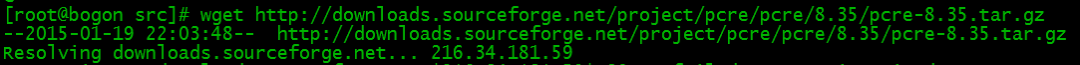

1. PCRE enables Nginx's Rewrite function. Download the PCRE installation package:

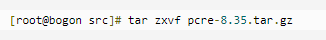

2. Unzip the installation package:

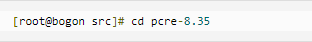

3. Enter the installation package directory:

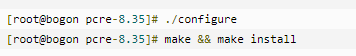

4. Compile and install:

5. Check the PCRE version:

Third, install Nginx

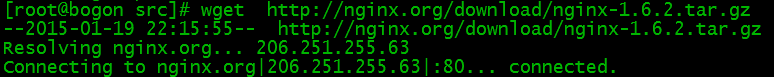

1. Download Nginx.

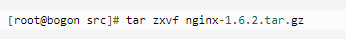

2. Extract the installation package:

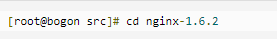

3. Enter the installation package directory:

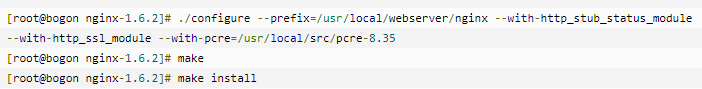

4. Compile and install:

5. Check the Nginx version:

At this point, the nginx installation is complete.

Fourth, Nginx configuration

Create a user, www, for Nginx to run as:

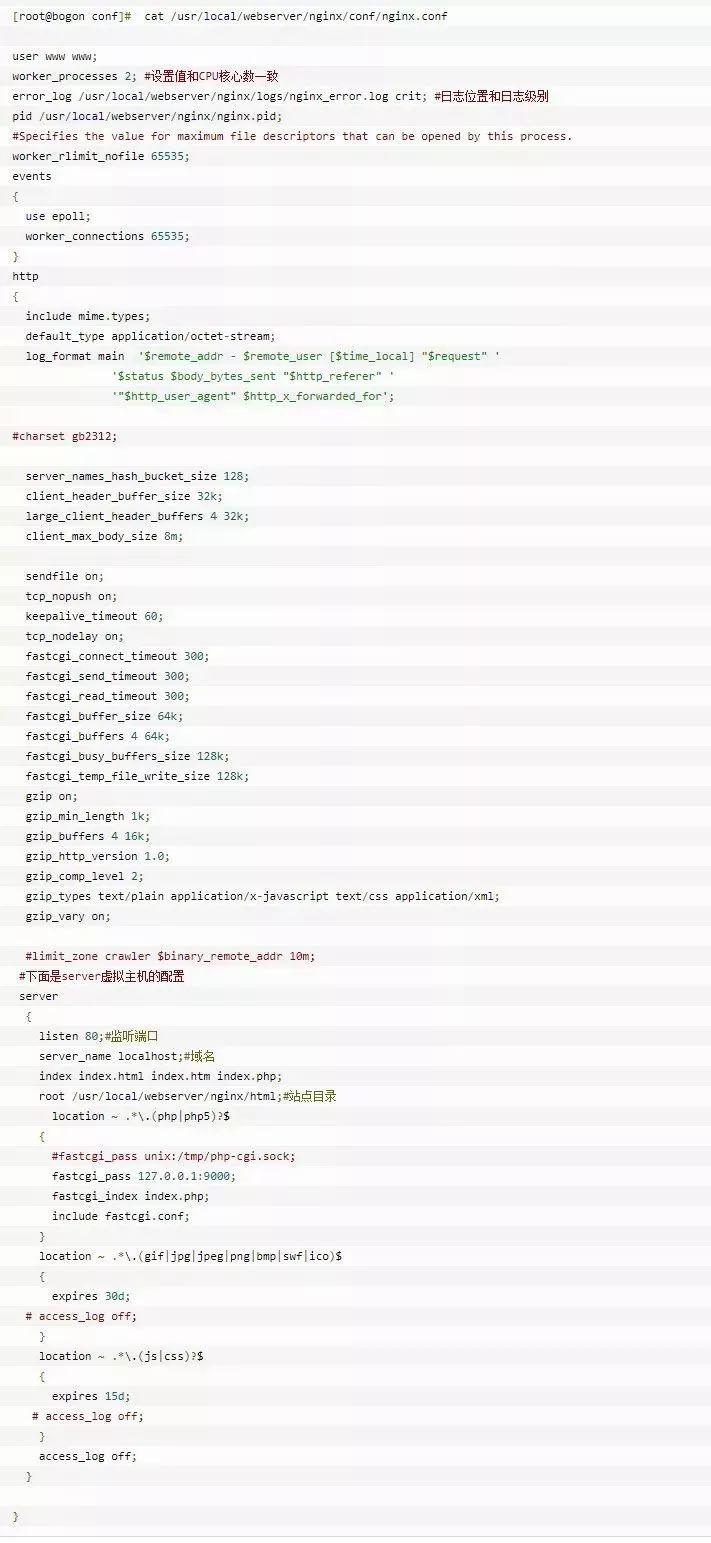

Configure nginx.conf by replacing the contents of /usr/local/webserver/nginx/conf/nginx.conf with the following:


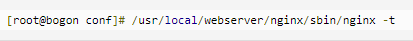

Check that the configuration file nginx.conf is correct:

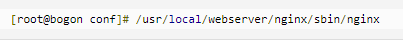

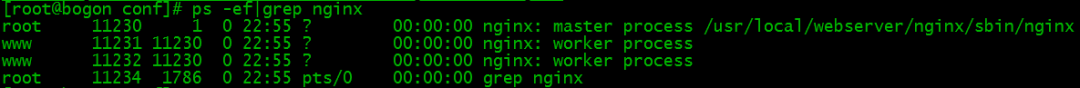

Fifth, start Nginx

The Nginx startup command is as follows:

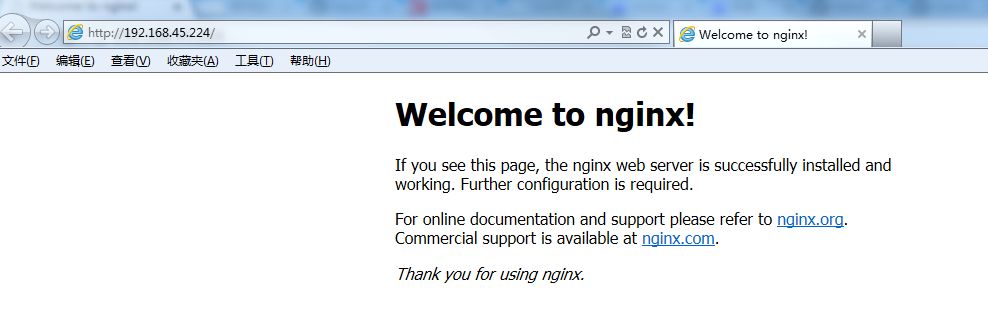

Sixth, visit the site

Access the configured site's IP address from a browser.

Common Nginx directives

1. main global configuration

Parameters that nginx uses at runtime that are independent of any specific service (such as HTTP serving or the mail proxy), for example the number of worker processes and the user it runs as.

worker_processes 2: in the top-level main section of the configuration file, sets the number of worker processes. The master process manages the workers, which accept and process requests. This value can be set to the number of CPU cores (grep ^processor /proc/cpuinfo | wc -l), which is also what the auto value does. If SSL and gzip are enabled, it can be set to the number of logical CPUs or even twice that, which can reduce I/O waits. If the nginx server also runs other services, consider reducing it accordingly.
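As a rough illustration of the sizing rule above, the Python sketch below counts `processor` lines the same way `grep ^processor /proc/cpuinfo | wc -l` does and turns the result into a worker_processes line. The helper names and the optional `multiplier` (standing in for the "up to twice the logical CPUs" advice when SSL and gzip are on) are our own assumptions, not anything nginx provides.

```python
import os


def count_processors(cpuinfo_text: str) -> int:
    """Count lines starting with 'processor', like `grep ^processor /proc/cpuinfo | wc -l`."""
    return sum(1 for line in cpuinfo_text.splitlines() if line.startswith("processor"))


def worker_processes_directive(cores: int, multiplier: int = 1) -> str:
    """Build the directive; multiplier=2 reflects the SSL/gzip advice (an assumption)."""
    if cores < 1 or multiplier < 1:
        raise ValueError("cores and multiplier must be positive")
    return f"worker_processes {cores * multiplier};"


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            cores = count_processors(f.read())
    except OSError:  # no /proc on this system (e.g. macOS, Windows)
        cores = 0
    cores = cores or os.cpu_count() or 1
    print(worker_processes_directive(cores))
```

In practice `worker_processes auto;` lets nginx do this detection itself; the sketch only makes the arithmetic visible.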

worker_cpu_affinity is also written in the main section. Under high concurrency, binding each worker to a CPU (CPU affinity) reduces the performance loss caused by restoring registers and other context when processes switch between CPU cores. For example, worker_cpu_affinity 0001 0010 0100 1000; (four cores).
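The masks in worker_cpu_affinity follow a simple pattern: one bitmask per worker, with a single bit set for the core that worker is pinned to, the rightmost bit being CPU 0. A small Python sketch (the function name is hypothetical) that generates the line for N cores:

```python
def cpu_affinity_directive(cores: int) -> str:
    """Return a worker_cpu_affinity line pinning worker i to core i."""
    if cores < 1:
        raise ValueError("cores must be positive")
    # format(1 << i, "04b") gives "0001", "0010", ... (rightmost bit = CPU 0)
    masks = [format(1 << i, f"0{cores}b") for i in range(cores)]
    return "worker_cpu_affinity " + " ".join(masks) + ";"


print(cpu_affinity_directive(4))  # worker_cpu_affinity 0001 0010 0100 1000;
```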

worker_connections 2048 is written in the events section. It sets the maximum number of connections each worker process can handle (open) concurrently, including all connections the proxy makes to clients and backends. When nginx is a reverse proxy, the maximum number of clients = worker_processes * worker_connections / 4, so the maximum number of client connections here is 1024. This value can be raised to 8192 depending on the situation, but it must not exceed worker_rlimit_nofile (below). When nginx is used as an HTTP server, divide by 2 instead of 4.

worker_rlimit_nofile 10240 is written in the main section. It is not set by default and can be raised up to the operating system's maximum of 65535.
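The connection arithmetic from the two directives above can be written out in a few lines of Python. This sketch simply encodes the article's rule of thumb (divide by 4 as a reverse proxy, by 2 as an HTTP server) and the requirement that worker_connections stay within worker_rlimit_nofile; the function names are hypothetical.

```python
def max_clients(worker_processes: int, worker_connections: int, reverse_proxy: bool) -> int:
    """Rule of thumb from the text: divide by 4 as a reverse proxy, by 2 as an HTTP server."""
    divisor = 4 if reverse_proxy else 2
    return worker_processes * worker_connections // divisor


def check_connection_limits(worker_connections: int, worker_rlimit_nofile: int) -> None:
    """Reject settings where a worker may need more descriptors than it may open."""
    if worker_connections > worker_rlimit_nofile:
        raise ValueError(
            f"worker_connections ({worker_connections}) exceeds "
            f"worker_rlimit_nofile ({worker_rlimit_nofile})"
        )


print(max_clients(2, 2048, reverse_proxy=True))   # 1024
print(max_clients(2, 2048, reverse_proxy=False))  # 2048
check_connection_limits(8192, 10240)              # passes silently
```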

use epoll is written in the events section. On Linux, nginx uses the epoll event model by default, which is a large part of why nginx is so efficient on Linux. On OpenBSD and FreeBSD, nginx uses kqueue, an efficient event model similar to epoll. When the operating system supports neither, nginx falls back to select.
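To make the event-model idea concrete, the Python sketch below uses the standard `selectors` module. Its `DefaultSelector` picks epoll on Linux and kqueue on BSD and macOS, and falls back to poll or select elsewhere, much like the choice described above. A single process and thread waits on many sockets at once and services whichever ones are ready, with local socket pairs standing in for client connections. This only illustrates the model, not nginx's own code.

```python
import selectors
import socket


def serve_many(n: int = 100):
    """Handle n simulated connections in one thread with an event loop."""
    sel = selectors.DefaultSelector()  # epoll / kqueue / poll / select, depending on the OS
    pairs = [socket.socketpair() for _ in range(n)]
    try:
        for idx, (server_end, _client_end) in enumerate(pairs):
            server_end.setblocking(False)
            sel.register(server_end, selectors.EVENT_READ, data=idx)
        # Each "client" sends a small request.
        for idx, (_server_end, client_end) in enumerate(pairs):
            client_end.sendall(f"request {idx}".encode())
        received = {}
        while len(received) < n:
            events = sel.select(timeout=1)
            if not events:
                raise TimeoutError("no socket became ready")
            for key, _mask in events:
                # Only ready sockets are touched, so recv() does not block here.
                received[key.data] = key.fileobj.recv(1024).decode()
                sel.unregister(key.fileobj)
        return type(sel).__name__, received
    finally:
        sel.close()
        for server_end, client_end in pairs:
            server_end.close()
            client_end.close()


name, got = serve_many(100)
print(name, len(got))  # e.g. "EpollSelector 100" on Linux
```

The contrast with the one-process-per-connection model is that the loop never sits blocked on one client while others are waiting.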

2. http server

Configuration parameters related to providing HTTP services, for example whether to use keepalive and whether to use gzip compression.

sendfile on enables efficient file transfer mode. The sendfile directive specifies whether nginx calls the sendfile() system call to output files, which reduces data copies and context switches between user space and kernel space. Set it to on for ordinary applications; for download-type applications with heavy disk I/O, it can be set to off to balance disk and network I/O speed and reduce system load.
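You can try the zero-copy idea behind sendfile on from Python, which exposes the same system call as `os.sendfile` on Linux and other Unix systems (not Windows). In this sketch the kernel moves the file's bytes straight to the socket without passing them through a user-space buffer, and a local socket pair stands in for the client connection. The helper names are hypothetical; in real code, `socket.socket.sendfile()` wraps the same mechanism.

```python
import os
import socket
import tempfile


def send_file_zero_copy(path: str, sock: socket.socket) -> int:
    """Send a whole file over sock via os.sendfile; returns bytes sent."""
    size = os.path.getsize(path)
    sent = 0
    with open(path, "rb") as f:
        while sent < size:
            # The kernel copies from the file to the socket; no read()/write() loop in Python.
            n = os.sendfile(sock.fileno(), f.fileno(), sent, size - sent)
            if n == 0:  # end of file reached early
                break
            sent += n
    return sent


def demo(payload: bytes = b"x" * 4096):
    """Write payload to a temp file, sendfile it through a socket pair, read it back."""
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(payload)
        path = tmp.name
    server_end, client_end = socket.socketpair()
    try:
        sent = send_file_zero_copy(path, server_end)
        server_end.shutdown(socket.SHUT_WR)  # signal end of response
        chunks = []
        while True:
            chunk = client_end.recv(4096)
            if not chunk:
                break
            chunks.append(chunk)
        return sent, b"".join(chunks)
    finally:
        server_end.close()
        client_end.close()
        os.unlink(path)


print(demo()[0])  # 4096
```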

keepalive_timeout 65: the keep-alive (persistent) connection timeout, in seconds. This parameter is very sensitive: it depends on the browser type, the backend server's timeout settings, and the operating system's settings, and deserves an article of its own. When a keep-alive connection is used to request many small files, it saves the overhead of re-establishing connections; however, if a large file is being uploaded and the upload does not finish within 65 s, it will fail. If the timeout is too long and there are many users, holding connections open for a long time consumes a lot of resources.

send_timeout: the timeout for sending a response to the client. It applies only to the time between two successive write operations; if the client receives nothing within this time, Nginx closes the connection.

client_max_body_size 10m: the maximum size of a client request body, such as an uploaded file. If large files need to be uploaded, raise this limit accordingly.
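nginx accepts sizes with suffixes such as `k` and `m`. It rejects a request whose body exceeds client_max_body_size with a 413 (Request Entity Too Large) error, and a value of 0 disables the check. The Python sketch below mimics that decision. The parser is a simplified assumption that covers only plain numbers and the `k`, `m`, and `g` suffixes, and the helper names are hypothetical.

```python
import re

_UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_nginx_size(value: str) -> int:
    """Parse sizes like '10m', '128k' or '512' into bytes (simplified)."""
    match = re.fullmatch(r"(\d+)([kKmMgG]?)", value.strip())
    if match is None:
        raise ValueError(f"invalid size: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit.lower()]


def body_status(content_length: int, client_max_body_size: str = "10m") -> int:
    """Return 413 if the body would be rejected, else 200 (this check passes)."""
    limit = parse_nginx_size(client_max_body_size)
    if limit != 0 and content_length > limit:  # 0 means "no limit"
        return 413
    return 200


print(body_status(5 * 1024 * 1024))   # 200
print(body_status(11 * 1024 * 1024))  # 413
```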

client_body_buffer_size 128k: the maximum number of bytes of a client request body that are buffered in memory.

Module http_proxy:

This module makes nginx work as a reverse proxy server, including caching (covered in a separate article).

proxy_connect_timeout 60: the timeout for nginx to establish a connection with the backend server (proxy connect timeout).

proxy_read_timeout 60: once connected, the timeout between two successive read operations from the backend server (proxy receive timeout).

proxy_buffer_size 4k: sets the size of the buffer nginx uses to read the response header from the backend realserver. By default it equals the size of one proxy_buffers buffer; in practice this value can be set smaller.

proxy_buffers 4 32k: the number and size of the buffers nginx uses to hold the response from the backend realserver for a single connection; this setting suits pages that average under 32k.

proxy_busy_buffers_size 64k: the buffer size that may be busy sending to the client under high load (twice one proxy_buffers buffer).
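The three buffer settings above constrain one another. To our understanding, nginx refuses the configuration unless proxy_busy_buffers_size is at least as large as both proxy_buffer_size and one proxy_buffers buffer, and smaller than all proxy_buffers minus one buffer. The Python sketch below (hypothetical helper names) checks the values from the text against those two rules. It also computes a rough per-connection upper bound on in-memory buffering: proxy_buffer_size + number * size.

```python
KB = 1024


def check_proxy_buffers(buffer_size: int, count: int, size: int, busy: int):
    """Return a list of problems with the proxy buffer settings (empty if fine)."""
    problems = []
    if busy < max(buffer_size, size):
        problems.append("proxy_busy_buffers_size is smaller than max(proxy_buffer_size, one proxy_buffers buffer)")
    if busy >= count * size - size:
        problems.append("proxy_busy_buffers_size must be less than all proxy_buffers minus one buffer")
    return problems


def per_connection_upper_bound(buffer_size: int, count: int, size: int) -> int:
    """Header buffer plus all response buffers for one proxied connection."""
    return buffer_size + count * size


# Values from the text: proxy_buffer_size 4k, proxy_buffers 4 32k, proxy_busy_buffers_size 64k
print(check_proxy_buffers(4 * KB, 4, 32 * KB, 64 * KB))      # []
print(per_connection_upper_bound(4 * KB, 4, 32 * KB) // KB)  # 132
```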

proxy_max_temp_file_size: when the backend response does not fit in proxy_buffers, part of it is saved to a temporary file on disk. This value sets the maximum temporary file size. The default is 1024M, and it is unrelated to proxy_cache. Anything beyond this size is passed from the upstream server synchronously. Set it to 0 to disable temporary files.

proxy_temp_file_write_size 64k: limits the amount of data written to a temporary file at a time when backend responses are buffered to temporary files. proxy_temp_path (set when compiling) specifies the directory they are written to.

proxy_pass and proxy_redirect are covered in the location section.

Module http_gzip:

gzip on: enables gzip-compressed output to reduce network transfer.

gzip_min_length 1k: sets the minimum response size, in bytes, that is eligible for compression, taken from the Content-Length header. The default is 20. A value of at least 1k is recommended, since compressing responses smaller than 1k may make them larger.

gzip_buffers 4 16k: sets the number and size of the buffers used to store the gzip-compressed output stream. 4 16k means memory is allocated in 16k units, up to four of them.

gzip_http_version 1.0: the minimum HTTP version of a request for which compression is enabled. Early browsers did not support gzip, and users saw garbled characters, which is why this option exists. If you use Nginx as a reverse proxy and want gzip compression enabled, set it to 1.0, since the communication at the other end uses HTTP/1.0.

gzip_comp_level 6: the gzip compression level. Level 1 has the lowest compression ratio and the fastest processing; level 9 has the highest compression ratio but the slowest processing (faster transfer, more CPU).

gzip_types: the MIME types to compress. The "text/html" type is always compressed, whether or not it is listed.

gzip_proxied any: when Nginx acts as a reverse proxy, controls whether responses to proxied requests are compressed. A request is recognized as proxied when it contains a "Via" header.

gzip_vary on: adds a "Vary: Accept-Encoding" header to the response, which lets front-end cache servers cache gzipped pages correctly, for example Squid caching Nginx-compressed data.
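The interplay of gzip_min_length, gzip_comp_level, and gzip_vary can be sketched with Python's standard `gzip` module. This is a simplified model, not nginx's implementation. It checks only whether the client's Accept-Encoding mentions gzip (ignoring q-values), skips bodies under the minimum length, and compresses at the given level. It also adds `Vary: Accept-Encoding` to compressed responses, as gzip_vary on would. The function name is hypothetical.

```python
import gzip


def maybe_gzip(body: bytes, accept_encoding: str, min_length: int = 1024, level: int = 6):
    """Return (body, headers), compressing the body when the rules allow it."""
    headers = {}
    if "gzip" not in accept_encoding.lower():
        return body, headers             # client did not ask for gzip
    if len(body) < min_length:
        return body, headers             # gzip_min_length: too small to be worth it
    headers["Content-Encoding"] = "gzip"
    headers["Vary"] = "Accept-Encoding"  # gzip_vary on: caches must key on Accept-Encoding
    return gzip.compress(body, compresslevel=level), headers


page = b"<p>hello nginx</p>\n" * 200
compressed, hdrs = maybe_gzip(page, "gzip, deflate")
print(len(page), len(compressed), hdrs)
```

Raising `level` toward 9 trades CPU time for smaller output, which is the same trade-off gzip_comp_level controls.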

3. server virtual host

The http service supports multiple virtual hosts. Each virtual host has its own server block containing the configuration for that host. Multiple servers can also be defined when proxying mail. Servers are distinguished by the address or port they listen on.

listen: the listening port, 80 by default; ports below 1024 require nginx to be started as root. Forms include listen *:80, listen 127.0.0.1:80, and so on.

server_name: the server name, such as localhost; it can also be matched with a regular expression.

Module http_upstream

This module load-balances client requests across backend servers using simple scheduling algorithms. upstream is followed by the name of the load balancer, and the backend realservers are listed inside {} in the form host:port options;. If there is only one backend, it can also be written directly in proxy_pass.
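nginx's default upstream method is weighted round-robin. To our understanding, it uses a "smooth" variant that spreads a heavy server's turns out instead of sending them in a burst. The Python sketch below implements that idea. The server names and weights are hypothetical examples, and real upstreams also track failures, backup servers, and other options that are left out here.

```python
class SmoothWeightedRoundRobin:
    """Pick backends in proportion to their weights, interleaving the picks."""

    def __init__(self, weights: dict):
        if not weights:
            raise ValueError("upstream needs at least one server")
        self.weights = dict(weights)
        self.current = {server: 0 for server in weights}
        self.total = sum(weights.values())

    def next_server(self) -> str:
        # 1. every server gains its weight; 2. the highest current value wins;
        # 3. the winner is pushed back by the total weight.
        for server, weight in self.weights.items():
            self.current[server] += weight
        best = max(self.current, key=self.current.get)
        self.current[best] -= self.total
        return best


upstream = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
print([upstream.next_server() for _ in range(7)])  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```

With weights 5:1:1, every seven picks contain five a's, but b and c are interleaved rather than appended at the end.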

4. location

Within the http service, a set of configuration items that apply to specific URLs.

root /var/: defines the server's default document root. If the location URL matches a subdirectory or file, root has no effect; it is usually placed in the server block or in location /.

index index.jsp index.html index.htm: defines the default file names served for a path, usually right after root.

proxy_pass http://backend: forwards the request to the server list defined by the upstream named backend, i.e. the reverse proxy, which corresponds to the upstream load balancer. You can also write proxy_pass http://ip:port.

proxy_redirect off; proxy_set_header Host $host; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;: set these four for now. Explaining any of them in depth involves fairly complex details, which will be covered in another article.

The syntax of location matching rules is both critical and fundamental; see the article "nginx configuration location summary and rewrite rule writing method".
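As a quick reference, the Python sketch below models the documented order in which nginx chooses a location:

1. An exact `=` match wins immediately.
2. Otherwise, the longest matching prefix is remembered.
3. If that prefix carries `^~`, it is used without checking regexes.
4. Otherwise, regex locations (`~` case-sensitive, `~*` case-insensitive) are tried in configuration order, and the first match wins.
5. If no regex matches, the remembered prefix is used.

Python's `re` stands in for PCRE, and the location labels are hypothetical.

```python
import re

# (modifier, pattern, label) in the order they appear in the config
LOCATIONS = [
    ("=", "/", "A"),
    ("", "/", "B"),
    ("", "/documents/", "C"),
    ("^~", "/images/", "D"),
    ("~*", r"\.(gif|jpg|jpeg)$", "E"),
]


def match_location(uri: str, locations=LOCATIONS):
    # 1. Exact match stops the search.
    for mod, pattern, label in locations:
        if mod == "=" and uri == pattern:
            return label
    # 2. Remember the longest matching prefix.
    best = None
    for mod, pattern, label in locations:
        if mod in ("", "^~") and uri.startswith(pattern):
            if best is None or len(pattern) > len(best[1]):
                best = (mod, pattern, label)
    # 3. A "^~" prefix skips the regex check.
    if best is not None and best[0] == "^~":
        return best[2]
    # 4. First matching regex in config order wins.
    for mod, pattern, label in locations:
        flags = re.IGNORECASE if mod == "~*" else 0
        if mod in ("~", "~*") and re.search(pattern, uri, flags):
            return label
    # 5. Fall back to the longest prefix (or nothing).
    return best[2] if best else None


for uri in ["/", "/index.html", "/documents/document.html", "/images/1.gif", "/documents/1.jpg"]:
    print(uri, "->", match_location(uri))
```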

5. Other

5.1 Access Control allow/deny

Nginx's access control module is installed by default, and its syntax is simple. You can write multiple allow and deny rules to permit or deny an IP address or IP range; the rules are checked in order, and matching stops at the first rule that applies. For example:

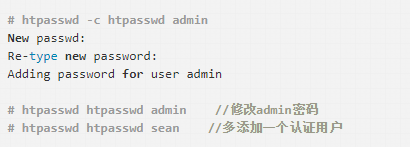
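The first-match rule is easy to model. The Python sketch below evaluates a list of allow/deny rules in order with the standard `ipaddress` module. The rule list is a hypothetical example, since the screenshot's contents are not reproduced here. When no rule matches, access is allowed, as with nginx's access module.

```python
import ipaddress

# Hypothetical rules, checked top to bottom like allow/deny lines in a location block.
RULES = [
    ("deny", "192.168.1.1"),
    ("allow", "192.168.1.0/24"),
    ("allow", "10.1.1.0/16"),
    ("deny", "all"),
]


def is_allowed(client_ip: str, rules=RULES) -> bool:
    addr = ipaddress.ip_address(client_ip)
    for action, target in rules:
        # strict=False accepts host bits in the network, e.g. 10.1.1.0/16.
        if target == "all" or addr in ipaddress.ip_network(target, strict=False):
            return action == "allow"  # first matching rule decides
    return True  # no rule matched


for ip in ["192.168.1.1", "192.168.1.20", "10.1.200.3", "8.8.8.8"]:
    print(ip, is_allowed(ip))
```

Order matters: placing `deny 192.168.1.1` after `allow 192.168.1.0/24` would let that host in, because the broader allow would match first.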

We can also use htpasswd from the httpd-devel tools to set a login password for the protected path.

This generates a password file encrypted with CRYPT by default. Uncomment the two lines above nginx-status and restart nginx for the change to take effect.
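If `htpasswd` is not available, you can also produce a password file entry in Python. As far as we know, nginx's auth_basic_user_file accepts a `{SSHA}` scheme besides crypt() hashes. In that scheme, the stored value is the base64 encoding of the SHA-1 digest of password + salt, followed by the salt. The sketch below writes and checks such an entry. The user name and password are placeholders, SHA-1 is weak by modern standards, and `htpasswd` remains the tool used in this article.

```python
import base64
import hashlib
import hmac
import os

SCHEME = "{SSHA}"


def ssha_entry(user: str, password: str, salt=None) -> str:
    """Build a 'user:{SSHA}...' line for a basic-auth password file."""
    if salt is None:
        salt = os.urandom(8)
    digest = hashlib.sha1(password.encode() + salt).digest()
    return f"{user}:{SCHEME}{base64.b64encode(digest + salt).decode()}"


def verify_ssha(entry: str, password: str) -> bool:
    """Check a password against a line produced by ssha_entry."""
    _user, stored = entry.split(":", 1)
    if not stored.startswith(SCHEME):
        raise ValueError("not an SSHA entry")
    raw = base64.b64decode(stored[len(SCHEME):])
    digest, salt = raw[:20], raw[20:]  # SHA-1 digests are 20 bytes
    candidate = hashlib.sha1(password.encode() + salt).digest()
    return hmac.compare_digest(candidate, digest)


line = ssha_entry("admin", "change-me")
print(line)
print(verify_ssha(line, "change-me"))  # True
```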

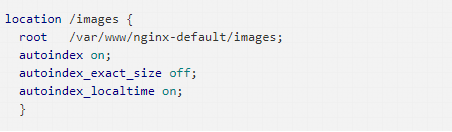

5.2 Listing directories with autoindex

By default, Nginx does not allow directory listings. To enable them, open the nginx.conf file and add autoindex on; to the location, server, or http section. It is also best to add two other parameters:

autoindex_exact_size off;: the default is on, which shows the exact file size in bytes. When set to off, the approximate size is shown in kB, MB, or GB.

autoindex_localtime on;: the default is off, which shows file times in GMT. When set to on, file times are shown in the server's local time.
