Lei Feng Network note: This article was written by Slyvia & Trista and compiled by ARinChina from the Facebook Code post "360 video stabilization: A new algorithm for smoother 360 video viewing".

This article covers 1) the new algorithm structure of Facebook's video stabilization technology; 2) how it works; 3) its performance; 4) the time-lapse photography algorithm.

From professional cameras to consumer handheld models, there are currently dozens of cameras on the market that can shoot 360-degree video, with widely varying specifications and quality. As these cameras become more popular, the range and volume of 360-degree video content is also growing, and people are beginning to shoot 360-degree video on all kinds of occasions and in all kinds of environments.

However, it is difficult to avoid shake and keep the camera stable while shooting, especially when filming activities in motion (such as mountain biking and hiking) with a handheld camera. So far, most video stabilization technologies have been designed for narrow-field video, such as traditional video shot with a mobile phone, and these techniques do not work well for 360-degree video.

As a result, Facebook decided to develop a new video stabilization technology for 360-degree video. Currently, this technology is already in testing and is expected to support the Facebook and Oculus platforms.

Facebook calls the new technology a "deformation rotation" motion model. A hybrid 3D-2D technique is used to optimize the model parameters and make 360-degree video smoother. At the same video quality, it can reduce the bit rate by 10% to 20%, which improves efficiency. In communications and computing, the bit rate indicates how much data is transmitted per unit of time.

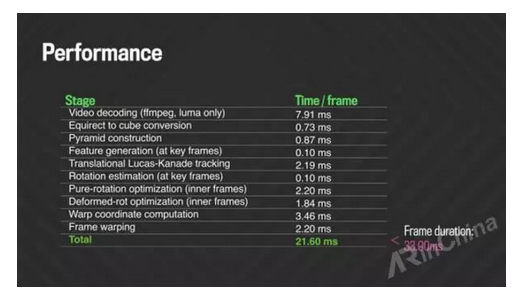

On a standard machine, this new technology can stabilize 360-degree video in less than 22 milliseconds per frame. In fact, stabilizing a video is faster than playing it back at normal speed.

In addition, Facebook's new technology makes it possible to speed up 360-degree video, so that lengthy footage (such as a long bike ride) can be played back in a faster, more watchable form.

To achieve this, Facebook developed a 360-degree time-lapse photography algorithm in addition to the main stabilization algorithm. It adjusts the time stamps of the video frames to even out the camera's speed over time.

| A new algorithm structure: hybrid 3D-2D + deformation rotation model

Most current video stabilization algorithms share the same structure: track the motion in the video, fit a motion model to it, smooth the motion, and produce stabilized output frames. The main difference between algorithms is how they model the motion in the video.

Most of these algorithms are designed for narrow-field video and use a single parametric two-dimensional motion model, such as a full-frame perspective (homography) warp. Here "full-frame" means that one warp is applied to the entire video frame.

Although these methods are simple and effective, overly simple models cannot describe complex motion such as the parallax between foreground and background.

More advanced algorithms use more flexible motion models, but they exist mostly in academic publications. While these advanced algorithms can handle more complex motion, the extra flexibility has to be constrained to avoid visible geometric distortion.

Another class of algorithms operates in three dimensions. By reconstructing the camera trajectory and a geometric model of the scene, they derive a stabilized video in 3D. Because they use a more accurate model, these algorithms achieve a higher level of smoothness. However, three-dimensional reconstruction is slow and not robust.

Facebook's hybrid 3D-2D stabilization architecture combines the advantages of these two classes of algorithms. It applies three-dimensional analysis only to keyframes spaced a few seconds apart. It does not perform a complete reconstruction but only estimates the relative motion, which is an easier problem that can be solved more robustly.

The value of the three-dimensional analysis is that it can tell whether the observed motion comes from rotation or from translation.

For the inner frames (the remaining frames between the keyframes), Facebook uses a two-dimensional optimization with a new "deformation rotation" model to make the video motion as smooth as possible.

The deformation rotation model is similar to a global rotation but allows slight local deformation. Facebook optimizes the model parameters so that the model can absorb and undo a certain amount of translational shake (such as the camera bobbing up and down while walking), rolling shutter artifacts, lens deformation, and stitching artifacts.

In summary, the advantages of the hybrid 3D-2D structure are:

Accuracy : More accurate than pure two-dimensional methods, because the more powerful 3D analysis is used to stabilize the keyframes.

Robustness : More robust than pure three-dimensional methods, because Facebook uses 3D analysis only to estimate relative rotation, without a complete reconstruction. ("Robustness" here refers to a system's ability to maintain its properties under perturbations of its parameters, such as structure or size.)

Regularization : The fixed keyframes act as a regularizer for the two-dimensional optimization of the inner frames, which constrains the deformation rotation model and avoids wobble artifacts.

Speed : The hybrid architecture is faster than performing only 3D analysis or only 2D optimization.

| How does it work?

Like existing stabilization algorithms, Facebook's algorithm starts by tracking the motion of feature points in the video. A feature point is not only the location of a point; its local neighborhood also has a distinctive pattern that makes it trackable.

Since the input video uses an equirectangular projection, in which the picture is highly distorted near the poles, the frames are first converted to a less distorted cube map (at 256x256 resolution) so that motion can be tracked reliably.
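The conversion to a cube map can be illustrated with a minimal sketch. It assumes NumPy, an equirectangular frame whose horizontal axis spans 360 degrees of longitude, and a face-orientation convention chosen here for illustration (the function names and the convention are hypothetical, not Facebook's implementation). For each pixel of a cube face it computes the viewing direction and the matching equirectangular pixel.

```python
import numpy as np

def cube_face_to_equirect_coords(face, size, width, height):
    """Return (px, py) equirectangular coordinates for every pixel of one cube face."""
    # Pixel centers mapped to [-1, 1] on the face plane.
    t = (np.arange(size) + 0.5) / size * 2.0 - 1.0
    u, v = np.meshgrid(t, t)  # u grows to the right, v grows downward
    one = np.ones_like(u)
    # Viewing directions (x right, y up, z forward); an assumed convention.
    dirs = {
        "front": (u, -v, one),
        "back": (-u, -v, -one),
        "right": (one, -v, -u),
        "left": (-one, -v, u),
        "up": (u, one, v),
        "down": (u, -one, -v),
    }
    x, y, z = dirs[face]
    lon = np.arctan2(x, z)               # longitude in [-pi, pi]
    lat = np.arctan2(y, np.hypot(x, z))  # latitude in [-pi/2, pi/2]
    px = (lon / (2.0 * np.pi) + 0.5) * width
    py = (0.5 - lat / np.pi) * height
    return px, py

def sample_face(equirect, face, size=256):
    """Nearest-neighbor lookup of one cube face from an equirectangular image."""
    h, w = equirect.shape[:2]
    px, py = cube_face_to_equirect_coords(face, size, w, h)
    xi = np.floor(px).astype(int) % w                 # wrap around horizontally
    yi = np.clip(np.floor(py).astype(int), 0, h - 1)  # clamp at the poles
    return equirect[yi, xi]
```

Calling `sample_face(frame, "front")` on a luma frame yields a 256x256 face. A production version would typically use bilinear sampling rather than nearest-neighbor lookup.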

Because tracking works well on grayscale images, Facebook runs it directly on the luma (brightness) plane of the video, which avoids the cost of converting from YUV to RGB (YUV and RGB are color encoding schemes).
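As a small illustration of why no color conversion is needed, the sketch below reads the luma plane straight out of a raw frame. It assumes the planar I420 layout (a full-resolution Y plane followed by quarter-size U and V planes); the source only says "YUV", so the layout and the function name are assumptions.

```python
import numpy as np

def luma_plane_i420(buffer, width, height):
    """Return the Y (luma) plane of a planar I420 frame as a 2D uint8 array view."""
    frame = np.frombuffer(buffer, dtype=np.uint8)
    expected = width * height * 3 // 2  # Y plane plus quarter-size U and V planes
    if frame.size != expected:
        raise ValueError("buffer size does not match an I420 frame")
    # The Y plane is stored first, so it can be used as a grayscale image as-is.
    return frame[: width * height].reshape(height, width)
```

The returned array is a view into the original buffer, so no pixel data is copied or converted.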

Facebook uses the KLT tracking algorithm to track feature points and relies on an important concept: keyframes. Keyframes are used to estimate the relative rotation of the camera, and they provide regularization for the 2D optimization. Keyframes are spread unevenly through the video, more densely in some parts and more sparsely in others.

Facebook then uses the five-point algorithm to estimate the relative rotation and translation between the cameras of successive keyframes. The five-point algorithm estimates the essential matrix, which encodes the relative rotation and the direction of translation between two calibrated views, from five pairs of corresponding image points.
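The following sketch does not implement the five-point solver itself. It only illustrates, under the assumed convention x2 = R x1 + t, the epipolar constraint that such a solver satisfies: for corresponding bearing vectors b1 and b2, b2ᵀ E b1 = 0 with E = [t]ₓ R. All function names are illustrative.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def rotation_y(angle):
    """Rotation by `angle` radians about the y axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def essential_matrix(R, t):
    """E = [t]_x R for the convention x2 = R @ x1 + t."""
    return skew(t) @ R

def epipolar_residuals(E, b1, b2):
    """b2_i^T E b1_i for each correspondence; zero for noise-free matches."""
    return np.einsum("ij,jk,ik->i", b2, E, b1)

# Five synthetic scene points seen from two camera poses.
rng = np.random.default_rng(0)
R, t = rotation_y(0.1), np.array([0.2, 0.0, 0.05])
X1 = rng.normal(size=(5, 3)) + np.array([0.0, 0.0, 5.0])
X2 = X1 @ R.T + t
b1 = X1 / np.linalg.norm(X1, axis=1, keepdims=True)  # unit bearing vectors
b2 = X2 / np.linalg.norm(X2, axis=1, keepdims=True)
residuals = epipolar_residuals(essential_matrix(R, t), b1, b2)
```

Because the constraint is unaffected by the length of the bearing vectors, it applies equally to directions on the viewing sphere of a 360-degree camera.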

The advantage of using a 3D-aware algorithm to estimate rotation is that it can distinguish between translational and rotational motion. Once the rotations of all keyframes have been estimated, the inverse transform is applied to each keyframe so that it is aligned with the first frame of the video.

Once all keyframes have been stabilized, they are locked, and attention turns to the rotations of the intermediate frames.

As mentioned earlier, the intermediate frames are handled with two-dimensional optimization rather than three-dimensional analysis. The goal of the optimization is to make the trajectories of tracked points across the non-key frames as smooth as possible. Since the rotations of the keyframes have already been fixed, they act as a regularizer that helps the optimization of the non-key frames converge.
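The alignment step can be sketched as follows, assuming each relative rotation maps directions from the previous keyframe to the next one (a convention chosen here for illustration). Chaining the relative rotations gives each keyframe's rotation with respect to the first frame, and the inverse (the transpose, for a rotation matrix) undoes it.

```python
import numpy as np

def rotation_z(angle):
    """Rotation by `angle` radians about the z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def accumulate_rotations(relative):
    """Chain relative rotations R_(k-1 -> k) into absolute rotations R_(0 -> k)."""
    absolute = [np.eye(3)]
    for rel in relative:
        absolute.append(rel @ absolute[-1])
    return absolute

def stabilizing_rotations(absolute):
    """Inverse of each absolute rotation; applying it aligns the keyframe with frame 0."""
    return [R.T for R in absolute]

relative = [rotation_z(0.1), rotation_z(0.2)]
absolute = accumulate_rotations(relative)
corrections = stabilizing_rotations(absolute)
```

Applying `corrections[k]` to keyframe `k` cancels its accumulated rotation, so every keyframe ends up in the orientation of the first frame.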

Even after the rotational shake has been solved, a certain amount of jitter often remains, caused by the factors mentioned earlier: a small amount of translation, rolling shutter, suboptimal lens correction, and stitching artifacts. Facebook therefore adds some flexibility to the motion model so that it can adapt to and undo these minor image deformations.

In the "deformation rotation" model, Facebook replaces the single global rotation with six local rotations spread across different positions, which allows the model to fit local, low-frequency deviations from pure rotation in the data.
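One way to picture such a model is the sketch below. The source does not say how the six local rotations are placed or blended, so everything here is an assumption made for illustration: the rotations are anchored at the six axis directions (the centers of the cube faces), each is stored as an axis-angle vector, and they are blended linearly with weights that depend on the viewing direction.

```python
import numpy as np

# Assumed anchor directions: +x, -x, +y, -y, +z, -z.
ANCHORS = np.array([[1, 0, 0], [-1, 0, 0],
                    [0, 1, 0], [0, -1, 0],
                    [0, 0, 1], [0, 0, -1]], dtype=float)

def rodrigues(rotvec):
    """Rotation matrix for an axis-angle vector (Rodrigues' formula)."""
    angle = np.linalg.norm(rotvec)
    if angle < 1e-12:
        return np.eye(3)
    k = rotvec / angle
    K = np.array([[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def blend_weights(direction):
    """Smooth weights of the six anchors; they sum to 1 for a unit direction."""
    return np.maximum(ANCHORS @ direction, 0.0) ** 2

def deformed_rotate(direction, local_rotvecs):
    """Rotate a viewing direction by a direction-dependent blend of six rotations."""
    d = direction / np.linalg.norm(direction)
    blended = blend_weights(d) @ local_rotvecs  # (6,) @ (6, 3) -> (3,)
    return rodrigues(blended) @ d
```

When all six local rotations are equal, `deformed_rotate` reduces to a single global rotation. Small differences between them produce the kind of slight, smoothly varying local deformation the text describes. Linear blending of axis-angle vectors is only reasonable when the six rotations are close to each other.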

It is crucial, however, that the motion model not be too flexible: an overly flexible model can fit the data too closely and introduce new artifacts instead of removing them.

Facebook developed this new algorithm so that users can watch smooth, stable 360-degree videos. Speed matters too, because users do not want to waste time waiting for video uploads. So although the algorithm can run on the GPU, Facebook also wants it to run efficiently on the CPU.

Facebook's approach is to divide the frame into 8×8-pixel regions, compute the coordinates for each region with the traditional bounding box algorithm, and use bilinear interpolation for the remaining pixels.

This approach is faster than using the bounding box algorithm for every pixel. The visual result is almost the same, but the computational efficiency is greatly improved. (The bounding box algorithm replaces a complex shape with a simple one.)
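The general idea of computing exact coordinates sparsely and interpolating in between can be sketched as follows. The block size of 8 pixels, the stand-in warp function `exact_warp`, and all names are assumptions for illustration. The sketch evaluates the expensive mapping only at block corners and bilinearly interpolates the warped coordinates for every other pixel.

```python
import numpy as np

def exact_warp(x, y):
    """Hypothetical stand-in for an expensive per-pixel coordinate mapping."""
    return x + 3.0 * np.sin(y / 200.0), y + 2.0 * np.cos(x / 300.0)

def coarse_warp(width, height, block=8, warp=exact_warp):
    """Approximate `warp` for every pixel from exact values at block corners."""
    # Evaluate the exact warp only on the coarse grid of block corners.
    gx = np.arange(0, width + block, block, dtype=float)
    gy = np.arange(0, height + block, block, dtype=float)
    GX, GY = np.meshgrid(gx, gy)
    WX, WY = warp(GX, GY)

    # For every pixel: index of its block and fractional position inside it.
    x, y = np.arange(width), np.arange(height)
    ix, iy = x // block, y // block
    fx = ((x % block) / block)[None, :]
    fy = ((y % block) / block)[:, None]

    def interp(W):
        # Bilinear interpolation between the four surrounding block corners.
        top = W[iy][:, ix] * (1 - fx) + W[iy][:, ix + 1] * fx
        bottom = W[iy + 1][:, ix] * (1 - fx) + W[iy + 1][:, ix + 1] * fx
        return top * (1 - fy) + bottom * fy

    return interp(WX), interp(WY)
```

With 8-pixel blocks the exact mapping is evaluated for roughly one pixel in 64, while the interpolation error stays small as long as the warp varies smoothly within a block.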

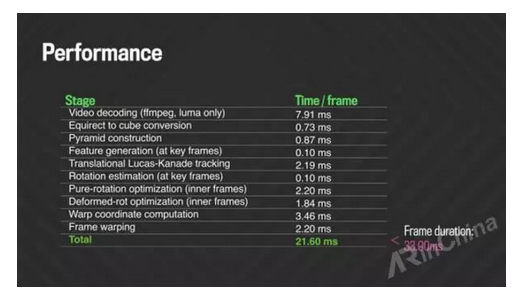

The following figure summarizes the per-frame time of each stage of the algorithm, measured with 1080p input and output.

In fact, Facebook's new algorithm stabilizes video faster than the video plays at normal speed:

During playback, each frame is displayed for about 30 milliseconds, while stabilizing a frame takes only about 20 milliseconds. With further improvements to the algorithm, Facebook could stabilize live 360-degree video frame by frame.

In addition to speed, Facebook has also optimized its efficiency.

Because stabilizing a 360-degree video does not crop away any of the captured scene, the original version can always be restored. Stabilization also improves the bit rate efficiency of 360-degree video (the bit rate is the amount of data transmitted per unit of time). It has no negative effect on playback, because people who watch 360-degree video are mostly used to rotating the view themselves.

The stabilization algorithm can effectively save bit rate. The following figure shows the bit rate consumed when the x264 library (a free software library) encodes video to the H.264/MPEG-4 AVC format. Orange represents skiing and blue represents stopping to rest.

As shown, the bit rate savings are between 10% and 20%, depending on the encoder settings.

Because Facebook's new algorithm makes 360-degree video look very smooth, it can also be used to create sped-up time-lapse video. Creating a time-lapse 360-degree video only requires dropping some of the frames, as long as the remaining frames still join into a continuous sequence.

However, a common characteristic of good time-lapse footage is a smooth, even camera speed. In a skiing video, for example, the skier sometimes speeds up and sometimes stops for a while, so the camera speed keeps changing.

To simulate a time-lapse camera that moves at a constant speed, the speed has to be evened out over time and the resting periods skipped.

To do this, first estimate the camera speed at each frame with a two-dimensional approximation based on the average motion vector. Then process these estimates in two passes: a temporal median filter followed by a low-pass filter. A low-pass filter lets signal components below the cutoff frequency pass and blocks components above it.
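The two filtering passes can be sketched as below. The window sizes, the choice of a moving average as the low-pass filter, and the function names are assumptions for illustration; the source does not specify them.

```python
import numpy as np

def camera_speed(motion_vectors):
    """Per-frame speed: magnitude of the average 2D motion vector of that frame."""
    return np.array([np.linalg.norm(np.mean(v, axis=0)) for v in motion_vectors])

def running_median(values, radius):
    """Temporal median over a window of 2 * radius + 1 frames (edges repeated)."""
    padded = np.pad(values, radius, mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(padded, 2 * radius + 1)
    return np.median(windows, axis=1)

def low_pass(values, radius):
    """Moving average as a simple low-pass filter (an assumed choice)."""
    kernel = np.ones(2 * radius + 1) / (2 * radius + 1)
    padded = np.pad(values, radius, mode="edge")
    return np.convolve(padded, kernel, mode="valid")

def smooth_speed(speed, median_radius=2, lowpass_radius=2):
    """Median pass to reject outliers, then a low-pass pass to remove jitter."""
    return low_pass(running_median(speed, median_radius), lowpass_radius)
```

The median pass removes isolated spikes, such as a single frame with a bad motion estimate, without smearing them into neighboring frames. The low-pass pass then smooths the remaining high-frequency variation.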

Using the estimated camera speed, the time stamps of the frames in the original video can be changed. In this way, a lengthy video can be sped up and shortened.

Looking ahead, Facebook stated that the new algorithm is still being tested and that it hopes to get feedback from users. Users can now try uploading 360-degree videos and share higher-quality, more comfortable videos with their friends.

Next, Facebook will work on improving the time-lapse photography algorithm and hopes to apply stabilization to live 360-degree video in the near future.
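A minimal sketch of this retiming step, under the assumption that the goal is a constant apparent camera speed: each frame's new time stamp is made proportional to the distance the camera has covered so far, so fast segments are stretched, slow segments are compressed, and resting periods collapse to zero duration. The function name and the `speedup` parameter are hypothetical.

```python
import numpy as np

def retime_timestamps(timestamps, speed, speedup):
    """New time stamps proportional to cumulative camera distance."""
    timestamps = np.asarray(timestamps, dtype=float)
    speed = np.asarray(speed, dtype=float)
    dt = np.diff(timestamps, prepend=timestamps[0])  # first frame has dt = 0
    distance = np.cumsum(speed * dt)                 # distance covered so far
    if distance[-1] <= 0:
        raise ValueError("camera never moves; nothing to equalize")
    duration = (timestamps[-1] - timestamps[0]) / speedup
    return timestamps[0] + distance / distance[-1] * duration
```

Frames that end up sharing a time stamp, as happens while the camera rests, can simply be dropped when the output video is written.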

Lei Feng Network note: This article was provided by ARC augmented reality (WeChat ID: arinchina) and is published by Lei Feng Network with authorization. To reproduce it, please contact us for authorization, credit the author and source, and do not remove any content.